Can I Use AI as My Doctor? State of AI for Health (Evals)

- Dates: 2025-11-12 - present

- Status: unfinished

- Importance: 4

November 2025

I’ve been interested lately in using LLMs like ChatGPT for my thumb sprain and other health issues, so much so that I’ve been working on a side project to evaluate AI’s accuracy for physical therapy. This article is an interesting summary of the latest advances for medical AI: https://www.newyorker.com/magazine/2025/09/29/if-ai-can-diagnose-patients-what-are-doctors-for.

For SecureBio journal club, I sent out that article as well as some slides I made to overview the field from a more “eval science” perspective. Below are the plain text versions of the slides.

Scope

I may keep updating this post with new thoughts and/or progress on AI-medicine and AI-PT, either keeping a history of it and/or recording the latest and greatest in the field. I may keep older writings in this post to record that history.

Journal club slides

Title: AI in medicine: lessons for AI-bio?

A progression of studies hinting LLMs outperform doctors in diagnosis

- Before LLMs: Differential diagnosis generators already exist; these computer programs output diagnoses given structured inputs (Bond et al. 2011).

- Early 2023: Academic studies showed LLMs would pass medical licensing exams (Brin et al. 2023). LLMs = No need for inputs to be structured.

- Late 2023-2024: In medical academia, concerns about exam validity prompted search for different eval designs, with some consensus landing on using real clinical cases as tests with rubric grading (Goh et al. 2024; Cabral et al. 2024; Kanjee et al. 2023). Most of these studies bake in baselining.

- 2025: OpenAI comes out with their own HealthBench.

Video talk by AI “Dr. Cabot” (from New Yorker article)

https://drive.google.com/file/d/19v46NgmGEl6sJsQOsiPl1E_YDOziFAIB/view?usp=sharing

Clinical case eval example (Goh et al. 2024)

Question

- Diagnostic Vignette: A 76M comes to his PCP complaining of pain in his back and thighs for 2 weeks…

- Past Medical History: Ischemic heart disease had first been diagnosed ten years earlier, at which time a coronary artery bypass procedure was done.

- Physical Examination: VITALS: 99.6° F.; pulse was 94/min and regular; BP was 110/88 mmHg. GEN: Well appearing. CARDS: There is a grade III/VI apical systolic murmur…

- Laboratory: WBC of 11.5 x 103 cells /μL; differential of 64% segs, 20% lymphocytes, 3% monocytes, 12% eosinophils and 1% basophil.

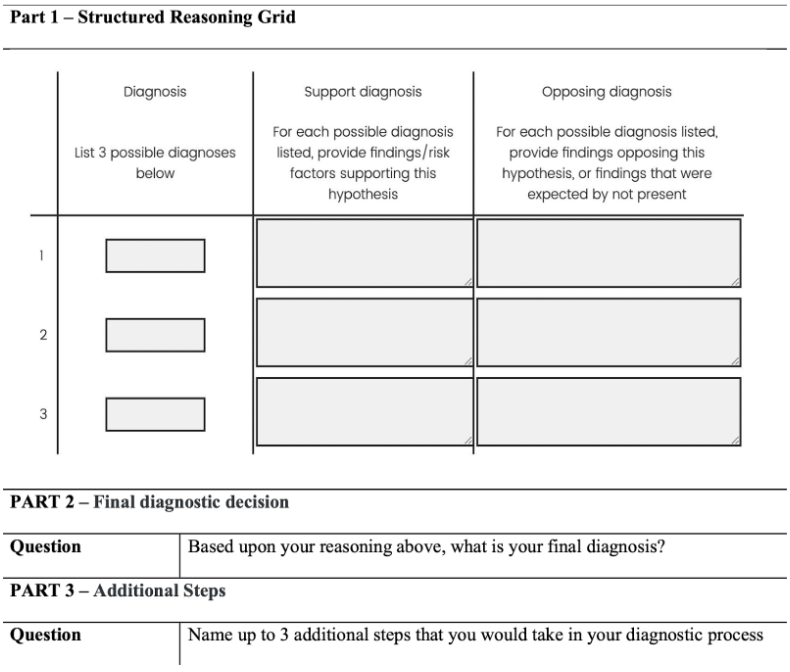

Grading of clinical case eval example

Brodeur et al. 2025: first study comparing LLM to doctors on new emergency room cases

- “We report a real-world study comparing human expert and AI second opinions in randomly-selected patients in the emergency room of a major tertiary academic medical center in Boston, MA.”

- “We compared LLMs and board-certified physicians at three predefined diagnostic touchpoints: triage in the emergency room, initial evaluation by a physician, and admission to the hospital or intensive care unit.”

- “o1 outperformed both 4o and two expert attending physicians, as assessed by two other attending physicians… The o1 model identified the exact or very close diagnosis (Bond scores of 4-5) in 65.8% of cases during the initial ER Triage, 69.6% during the ER physician encounter, and 79.7% at the ICU —surpassing the two physicians (54.4%, 60.8%, 75.9% for Physician 1; 48.1%, 50.6%, 68.4% for Physician 2) at each stage.”

What can we learn from these studies?

Non-AI-bio hat: can I use LLMs to self-diagnose a medical issue?

- Are you happy with 60-80% accuracy?

- In most of these studies (except HealthBench), the LLMs are given the nurse’s insights or medical context a layperson might not provide:

- In Brodeur et al. 2025, input data included:

- nurse-recorded data includ[ing]… chief complaint, presumptive diagnosis, triage nurse note, acuity number, means of arrival, and initial vitals.

- the provider’s history of present illness, physical exam, medical decision making, imaging reports, and labs were collected… attending attestation history of present illness, physical exam, and medical decision making was also captured.

- In the clinical cases, LLMs are given tons of input context (e.g. 4 pages!). See here for example.

- Conclusion: These studies don’t mean you can use an LLM like a doctor, although a second opinion doesn’t hurt.

AI-bio hat: LLM > LLM + expert?

Last year, [Rodman] co-authored a study in which some doctors solved cases with help from ChatGPT. They performed no better than doctors who didn’t use the chatbot. The chatbot alone, however, solved the cases more accurately than the humans. In a follow-up study, Rodman’s team suggested specific ways of using A.I.: they asked some doctors to read the A.I.’s opinion before they analyzed cases, and told others to give A.I. their working diagnosis and ask for a second opinion. This time, both groups diagnosed patients more accurately than humans alone did. The first group proved faster and more effective at proposing next steps. When the chatbot went second, however, it frequently “disobeyed” an instruction to ignore what the doctors had concluded. It seemed to cheat, by anchoring its analysis to the doctor’s existing diagnosis.

AI-bio hat: Did the “real-world” numbers match the clinical case evals?

- If so, can AI-bio deduce something about the correlation between “paper evals” and “real-world studies”?

- Brodeur et al. 2025: o1 was 65-80% correct in the “real world,” comparable to eval results.

- Unfortunately not much can be transferred to AI-bio:

- A paper eval for diagnosis (given lab data/etc.) is reasonably close to the act of diagnosing in the real world. Not as true for acquiring/engineering a bioweapon.

- Differences in these study designs make comparisons difficult.

General comparisons of AI-medicine and AI-bio

Different contexts:

- AI-medicine is mainly driven by medical academia and some startup/industry involvement. The main use case is that doctors (experts) use AI (for liability reasons). The aggregate motivation is to boost AI performance on medical evals (positive capability) with some motivation to preserve medical jobs and some to make products.

- AI-bio is mainly driven by the AI safety community (in civic society (us), AI labs, and government), with some academic involvement. The use cases include novice and expert uplift. The aggregate motivation is to limit bioweapons (negative capability) while not limiting too much.

Many similar challenges:

- Eval design:

- What is a good test? Clinical cases.

- How to establish ground truth, especially given subjectivity? Multiple senior doctors (experts) write ground truth.

- How much help to give in the prompt? A lot, because doctors in big hospitals today get a lot of info.

- Differing results on “who’s better: AI, expert, or expert+AI” depending on study design

- Use of rubrics and baselining

But some differences:

- AI-medicine has many existing exams and documented clinical cases which lent themselves naturally to producing evals. Not true in AI-bio.

- They’re doing manual rubric grading; we’ve moved to auto-grading.